Too Much Is Just Too Much

We all want to melt metal quickly in our foundries, but running your furnace burners at a high fire rate doesn’t necessarily improve your melt rate. When a furnace is over-fired, energy efficiency is extremely poor and melt loss can increase.

In practice, you can sometimes see over-firing: flames go up the flue or come out the doors, which causes extra maintenance. However, a furnace may be over-fired even without those signs. Furnace designers build a safety factor into the burners to ensure the melt rate meets design, but that safety factor can lead to less-than-optimum energy efficiency. An analysis of more than 100 aluminum furnaces found that the original fire rate exceeded the optimum level in 80% of them. Holding furnaces also showed signs of over-firing.

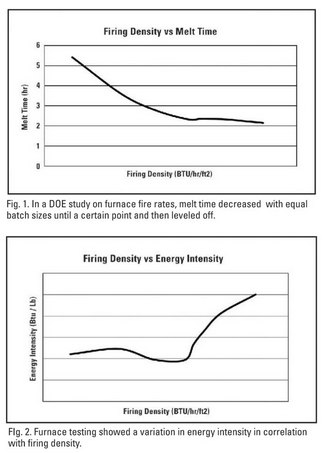

A Department of Energy study looked at fire rates in an aluminum furnace. Computer modeling and corresponding tests on a model furnace showed similar results. The burner fire rate was increased while the batch size was kept the same (Fig. 1). Melt time of the charge decreased up to a certain point. Beyond that point, the improvement in melt time was minimal.

Energy intensity was studied at the same time (Fig. 2). The tests showed some variation at lower fire rates, but beyond a certain point the energy intensity increased greatly (worsened). As the fire rate increased, the residence time of the products of combustion decreased, so not all of the energy was transferred to the walls or the metal. More energy was going into the furnace, but the extra energy was going up the flue instead of into the metal.
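For anyone who wants to track this on their own furnaces, energy intensity is commonly expressed as the energy consumed per unit of metal melted. The short Python sketch below shows the arithmetic. It assumes energy is logged in Btu and metal in pounds. The function name and the sample figures are hypothetical illustrations, not data from the study.

```python
def energy_intensity(energy_btu: float, metal_melted_lb: float) -> float:
    """Energy used per pound of metal melted (Btu/lb); lower is better."""
    if metal_melted_lb <= 0:
        raise ValueError("metal_melted_lb must be positive")
    return energy_btu / metal_melted_lb


# Hypothetical log: the same 20,000 lb batch melted at two fire rates.
moderate_fire = energy_intensity(energy_btu=30_000_000, metal_melted_lb=20_000)
high_fire = energy_intensity(energy_btu=40_000_000, metal_melted_lb=20_000)

print(f"Moderate fire rate: {moderate_fire:.0f} Btu/lb")
print(f"High fire rate:     {high_fire:.0f} Btu/lb")
```

In this made-up case the higher fire rate burns more fuel to melt the same batch, so its energy intensity is worse even if the melt finishes slightly sooner.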

Multiple trials were run in real-world furnaces. At one company, the fire rate was reduced in nine different furnaces. The savings ranged from 5% to 15%, with an average of 10%. In other tests with different furnace designs, the savings ranged from 14% to 15%. Plus, the change was essentially free!

The optimum fire rate depends on the type of charge, the way the metal is charged, and the furnace design. For instance, a flat bath requires a lower fire rate than a charge of metal in a mound. The best way to find the optimum level is through testing.

Ideally, controlled tests would be run on a furnace at different fire rates, monitoring melt rate and energy intensity to optimize the system. However, in the real world, just making a change seems to work best. A good start would be to decrease the fire rate by 10%. Stay at that fire rate for several weeks while monitoring production and energy use. If that works, step down to a lower fire rate and continue monitoring performance. Once you have gone too far (melt rate has decreased), go back to the last working fire rate.
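The step-down routine above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `measure_melt_rate` is a hypothetical stand-in for several weeks of production monitoring at a given fire rate, each further step is assumed to be another 10% cut, and the 2% tolerance for deciding that melt rate "has decreased" is an arbitrary choice, not a figure from the trials.

```python
from typing import Callable


def step_down_fire_rate(
    start_rate: float,
    measure_melt_rate: Callable[[float], float],
    step_fraction: float = 0.10,  # assumed: every step is a 10% cut
    tolerance: float = 0.02,      # assumed: a 2% drop counts as "too far"
    max_steps: int = 5,
) -> float:
    """Return the lowest tested fire rate that kept the melt rate up."""
    baseline = measure_melt_rate(start_rate)
    last_working = start_rate
    for _ in range(max_steps):
        candidate = last_working * (1.0 - step_fraction)
        # In a real plant this is weeks of monitoring, not a function call.
        melt_rate = measure_melt_rate(candidate)
        if melt_rate < baseline * (1.0 - tolerance):
            break  # gone too far: keep the last working fire rate
        last_working = candidate
    return last_working


def toy_furnace(fire_rate: float) -> float:
    """Hypothetical furnace: melt rate is flat at 100 until the fire rate
    falls below 75% of the original setting, then drops in proportion."""
    return min(100.0, fire_rate / 75.0 * 100.0)


best = step_down_fire_rate(start_rate=100.0, measure_melt_rate=toy_furnace)
print(f"Last working fire rate: {best:.1f}% of the original setting")
```

With this toy furnace, the 90% and 81% settings hold the melt rate, the next step does not, and the routine settles back at the last working setting.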

It can be better to tell the department head about the tests but not the furnace operators. Many times the fire rate will be blamed for any and all furnace problems. If a real problem develops, the department head will know to increase the fire rate.

Common practice tells us to push all the heat we can into a furnace. However, optimizing the burner fire rate can save thousands of dollars in a year while costing the company nothing.

This story appears in the July 2019 issue of Modern Casting.